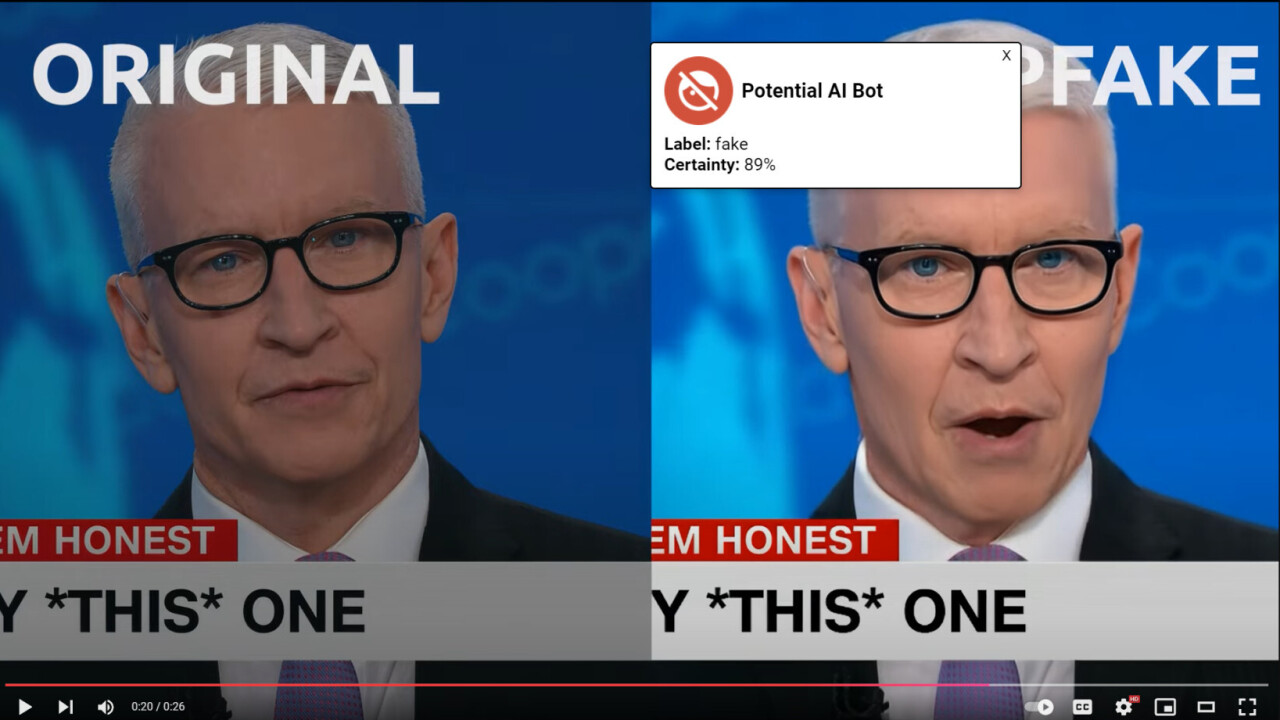

UK startup Surf Security has launched a beta version of what it claims is the world's first browser with a built-in feature designed to spot AI-generated deepfakes.

The tool, available in Surf's browser or as an extension, can determine with up to 98% accuracy whether the person you're interacting with online is a real human or an AI imitation, the company said.

The London-based cybersecurity upstart says the detector is built on "military-grade" neural network technology. The system uses State Space Models (SSMs) to analyse audio frames for inconsistencies, which lets it identify AI-generated voice clones across languages and accents.

"To maximise its effectiveness, we focused on accuracy and speed," said Ziv Yankowitz, Surf Security's CTO. The tool's neural network is trained on deepfakes created with the leading AI voice-cloning platforms, he said.

The system includes a built-in background noise reduction feature that cleans up audio before processing. "It can spot a deepfake audio in less than 2 seconds," said Yankowitz.

The new feature works on audio from recorded files and online videos, as well as from communication software such as WhatsApp, Slack, Zoom, and Google Meet. Users simply press a button, and the system checks whether the audio, recorded or live, is genuine or AI-generated. Surf said it will also add AI image detection to the browser's toolkit in the future.

Deepfakes are a growing problem

Deepfakes, which use AI to create convincing fake audio or video, are a rising threat.

Just this week, researchers at the BBC unearthed deepfake audio clips of David Attenborough that sound indistinguishable from the famous presenter's own voice. Various websites and YouTube channels are using the cloned voice to make him say things, about Russia and about the US election, that he never said.

This is just the tip of an ugly iceberg. Deepfakes have been used to enable large-scale fraud, incite political unrest through fake news, and destroy reputations by creating false or harmful content.

Surf said it launched the new deepfake detector to help protect enterprises, media organisations, police, and militaries around the world from the growing risk of AI cloning.

However, fighting deepfakes is an ongoing contest between people using machines for good and others using them for nefarious ends.

“AI voice cloning software becomes more capable by the day,” admitted Yankowitz. “So like all of cybersecurity, we are committing to winning an ever-evolving arms race.”

Surf expects to release the full version of its deepfake detector early next year.